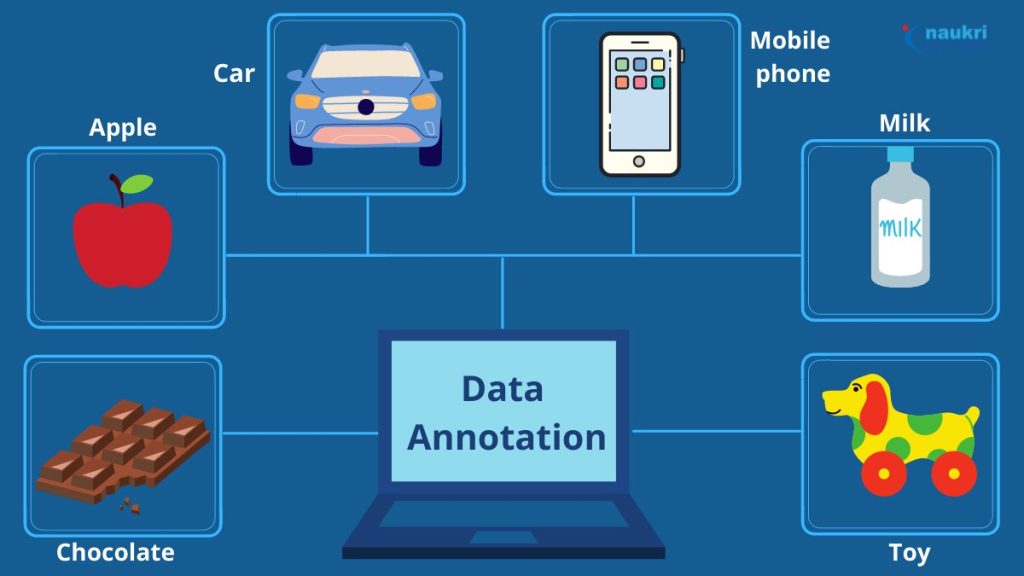

In the fast-moving field of artificial intelligence (AI) and machine learning (ML), data annotation is a cornerstone: it is how models get the labeled examples they need to learn with precision and accuracy. But what exactly is data annotation, and why does it matter so much in AI development? This guide covers its definition, the main types of annotation, the tools used to produce it, common challenges, best practices, and emerging trends.

Data Annotation Defined

In AI and ML, data annotation is the step that turns raw data into labeled examples a machine can learn from. This chapter explains what data annotation is, why it plays such a central role in AI progress, and what makes it a foundational part of machine learning projects.

The Essence of Data Annotation

At its core, data annotation is the process of labeling data to make it recognizable and understandable to AI models. This could involve tagging images with labels that describe their content, annotating segments of text to highlight sentiment or entities, or marking out frames in videos to identify actions or objects.

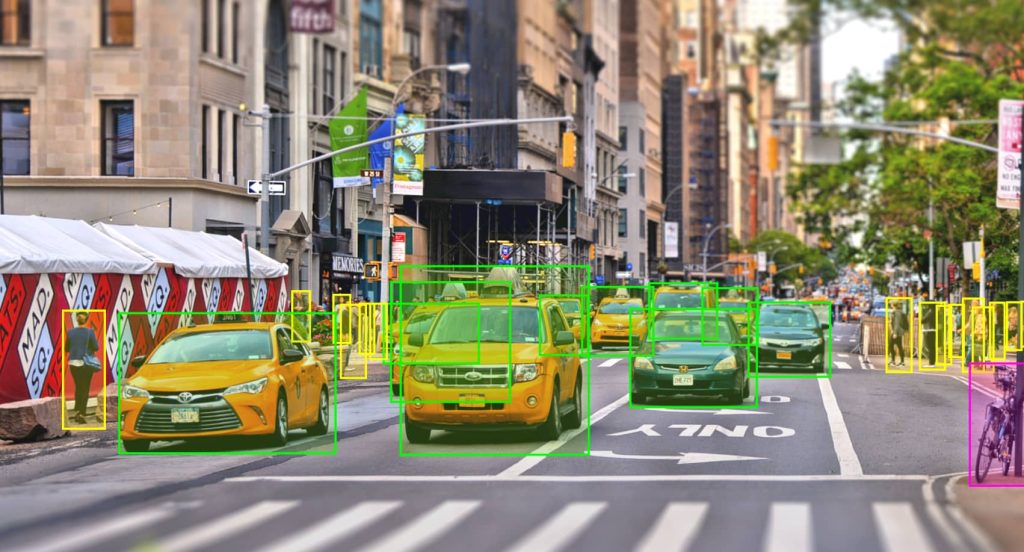

But why is this process so critical? The simple answer lies in the way AI and ML models learn. Unlike humans, machines do not possess innate understanding or intuition. They learn from patterns, and these patterns need to be identified and highlighted through data annotation. For instance, by annotating images of cars and bicycles in street scenes, an AI model can learn to differentiate between these two types of vehicles, understanding not just their visual characteristics but also their contextual relevance.

Data annotation does more than just label; it embeds context and meaning into raw data, transforming it into a rich, instructive dataset that can train AI models to perform a wide array of tasks, from simple classification to complex decision-making processes.

Why Data Annotation Matters

The significance of data annotation in AI cannot be overstated. Its impact is multifaceted, influencing not just the accuracy of AI models, but also their applicability, scalability, and efficiency. Here are a few reasons why data annotation is pivotal:

- Accuracy and Precision: The precision of data annotation directly influences the performance of AI models. Accurately annotated data ensures that models can learn with a high degree of specificity, reducing errors and enhancing reliability.

- Versatility in AI Applications: Data annotation enables the application of AI across various domains, from healthcare, where it aids in diagnosing diseases through medical imaging, to autonomous driving, where it helps vehicles understand their environment.

- Model Training and Improvement: Annotated data is used to train AI models, serving as the foundation upon which these models learn and improve over time. Through iterative training with well-annotated datasets, AI models can evolve to understand more complex patterns and make more accurate predictions.

- Human-AI Interaction: Annotated examples of human language, behavior, and preferences help AI models interpret them, making AI technologies more intuitive and responsive to human needs.

In essence, data annotation is the critical task that breathes life into raw data, enabling it to inform and teach AI models. It is the meticulous process of data annotation that ensures AI technologies can continue to advance, learn, and adapt to serve myriad purposes across industries and aspects of daily life.

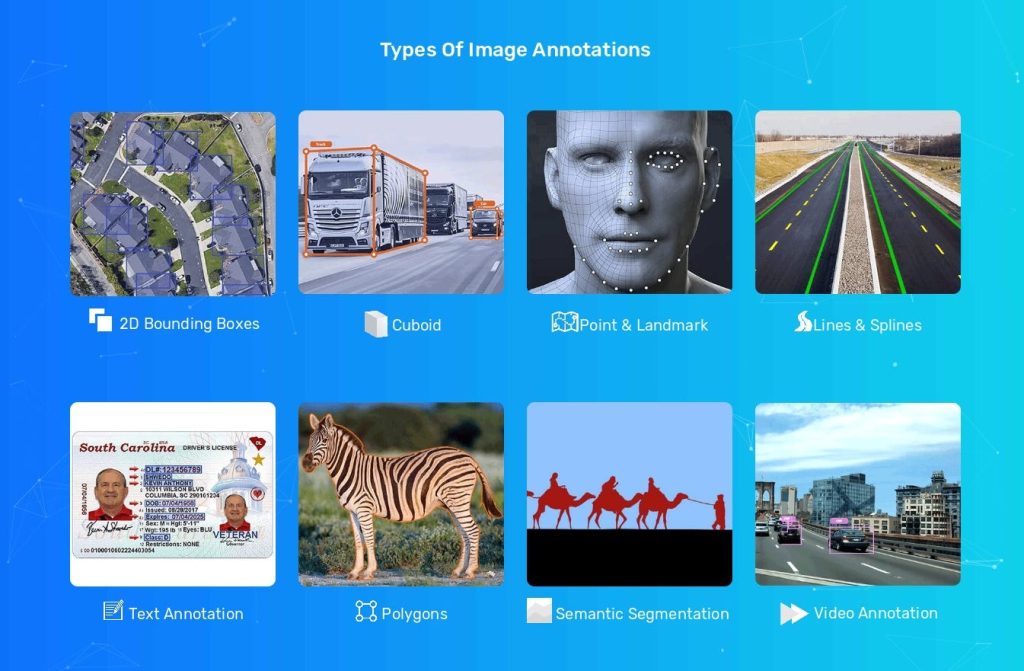

Types of Data Annotation

As the cornerstone of machine learning and AI development, data annotation must be versatile and adaptable to various data formats and requirements. This versatility is evident in the multiple types of data annotation, each suited to specific kinds of AI applications. Understanding these types is crucial for anyone involved in AI development, as the choice of annotation directly impacts the effectiveness and applicability of the resulting models.

Image and Video Annotation

Visual data, encompassing both images and videos, forms the backbone of numerous AI applications, from facial recognition systems to autonomous vehicle navigation. Annotating this data requires identifying and marking specific elements within visuals so that AI models can recognize and interpret them accurately.

- Bounding Boxes: One of the most common forms of image annotation, bounding boxes are rectangles drawn around objects in images to teach AI models to identify and locate those objects.

- Semantic Segmentation: This assigns a class label to every pixel in an image, dividing it into regions such as road, sky, or pedestrian. It is essential for applications that require a detailed, pixel-level understanding of the visual scene.

- Polygonal Annotations: For objects with irregular shapes, polygonal annotations are used, allowing for more precision than bounding boxes by tracing the exact outline of an object.

- Keypoint and Landmark Annotation: Used in facial recognition and animation, this type involves marking specific points on an object to define its shape or capture movement in videos.
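To make the difference between bounding boxes and polygons concrete, here is a minimal Python sketch of how the two might be represented. The class and field names are hypothetical (real tools each use their own schemas, such as COCO JSON or Pascal VOC XML), and the example shows why a polygon fits an irregular shape more tightly than its enclosing box.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass
class Polygon:
    label: str
    points: list[tuple[float, float]]  # vertices in order around the outline

    def area(self) -> float:
        # Shoelace formula: half the absolute sum of cross products of consecutive vertices
        total = 0.0
        n = len(self.points)
        for i in range(n):
            x1, y1 = self.points[i]
            x2, y2 = self.points[(i + 1) % n]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2.0

    def to_bounding_box(self) -> BoundingBox:
        # The tightest axis-aligned box that still contains every vertex
        xs = [x for x, _ in self.points]
        ys = [y for _, y in self.points]
        return BoundingBox(self.label, min(xs), min(ys), max(xs), max(ys))


# A triangular road sign traced as a polygon, then reduced to a box
sign = Polygon("road_sign", [(10, 40), (30, 0), (50, 40)])
box = sign.to_bounding_box()
print(sign.area(), box.area())  # 800.0 1600
```

The triangle's tight bounding box covers twice the triangle's area, so half of the boxed pixels are background, which is exactly the imprecision polygonal annotation avoids.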

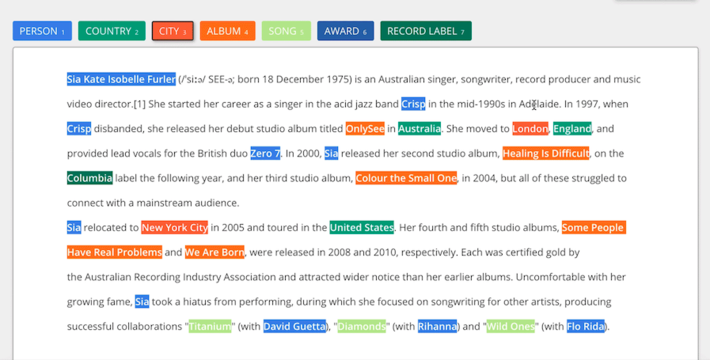

Text Annotation

Text annotation is pivotal for natural language processing (NLP) applications, enabling AI models to understand and generate human language. It involves labeling parts of the text to highlight its structural, syntactical, and semantic features.

- Sentiment Analysis: This involves labeling text to reflect sentiments expressed, helping AI models understand and predict emotional responses.

- Entity Recognition: By annotating names, places, dates, and other entities in text, models can learn to extract meaningful information from unstructured text data.

- Text Classification: This general annotation type involves categorizing text into predefined groups, aiding in content filtering, spam detection, and more.
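Entity annotations are often stored as labeled spans and then converted into per-token tags for training. The sketch below assumes the widely used BIO scheme (B- marks the first token of an entity, I- a continuation, O everything else) and token-level span offsets; the function name and sample sentence are illustrative.

```python
def spans_to_bio(tokens, spans):
    """Convert entity spans (start_token, end_token_exclusive, label) to BIO tags."""
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        if not (0 <= start < end <= len(tokens)):
            raise ValueError(f"span out of range: {(start, end, label)}")
        if any(tag != "O" for tag in tags[start:end]):
            raise ValueError(f"overlapping span: {(start, end, label)}")
        tags[start] = f"B-{label}"  # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"  # tokens inside the same entity
    return tags


tokens = ["Maria", "Lopez", "flew", "to", "New", "York", "on", "Monday", "."]
spans = [(0, 2, "PER"), (4, 6, "LOC"), (7, 8, "DATE")]
for token, tag in zip(tokens, spans_to_bio(tokens, spans)):
    print(f"{token}\t{tag}")
```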

Audio Annotation

Audio data annotation is essential for voice recognition, speech-to-text, and other auditory applications. It involves transcribing audio files and labeling the transcriptions with relevant information such as speaker identification or emotional tone.

- Transcription: Converting speech to text, which is then used to train AI models to understand spoken language.

- Sound Classification: Identifying and categorizing sounds within an audio clip, useful in various applications, from surveillance systems to customer service bots.
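Audio annotations are typically time-stamped segments that carry a speaker label and a transcript. This sketch assumes a simple segment structure of our own (not any particular tool's format) and shows the kind of sanity check a team might run before training: every segment must lie within the clip, and a single speaker should not have overlapping segments, while different speakers may overlap (crosstalk).

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float  # seconds from the beginning of the clip
    end: float    # seconds from the beginning of the clip
    speaker: str  # e.g. "agent" or "customer"
    text: str     # transcript for this span


def validate(segments, duration):
    """Return a list of human-readable problems found in the segments."""
    problems = []
    for s in segments:
        if not (0.0 <= s.start < s.end <= duration):
            problems.append(f"out of bounds or empty: {s.start}-{s.end} ({s.speaker})")
    # Track the latest end time seen per speaker while scanning in start order
    latest_end = {}
    for s in sorted(segments, key=lambda seg: seg.start):
        if s.start < latest_end.get(s.speaker, float("-inf")):
            problems.append(f"overlapping segments for {s.speaker} at {s.start}s")
        latest_end[s.speaker] = max(latest_end.get(s.speaker, s.end), s.end)
    return problems


segments = [
    Segment(0.0, 4.5, "agent", "Thanks for calling, how can I help?"),
    Segment(4.2, 9.0, "customer", "My order hasn't arrived yet."),
    Segment(9.0, 12.5, "agent", "Let me check that for you."),
]
print(validate(segments, duration=15.0))  # [] (the agent/customer overlap is allowed)
```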

Other Types of Annotation

While image, text, and audio annotations cover a vast array of AI applications, other types of annotation cater to more specialized needs:

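- 3D Point Cloud Annotation: Used for depth perception in autonomous vehicles and augmented reality (AR) applications, this involves labeling objects in 3D point clouds, such as those captured by LiDAR sensors (for example, with 3D cuboids), so that models can locate them in real-world space.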

- Video Frame Annotation: Similar to image annotation but applied frame-by-frame in videos, enabling models to understand and predict motion and change over time.
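Frame-by-frame labeling is expensive, so many video annotation tools let annotators label an object on a few keyframes and fill in the frames between them automatically. The sketch below assumes the simplest scheme, linear interpolation of box corners; the function name and keyframe format are hypothetical, and annotators would still correct frames where motion is not linear.

```python
def interpolate_box(kf_a, kf_b, frame):
    """Linearly interpolate a box between two keyframes.

    Each keyframe is (frame_index, (x_min, y_min, x_max, y_max)).
    """
    (fa, box_a), (fb, box_b) = kf_a, kf_b
    if not fa <= frame <= fb:
        raise ValueError("frame must lie between the two keyframes")
    if fa == fb:
        return box_a
    t = (frame - fa) / (fb - fa)  # 0.0 at the first keyframe, 1.0 at the second
    return tuple(a + t * (b - a) for a, b in zip(box_a, box_b))


start = (0, (100.0, 50.0, 140.0, 90.0))
end = (10, (200.0, 60.0, 240.0, 100.0))
for f in (0, 5, 10):
    print(f, interpolate_box(start, end, f))
```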

Each type of data annotation plays a crucial role in the development and refinement of AI models, tailored to the specific demands of various applications. The choice of annotation type depends on the nature of the data and the desired outcome of the AI application, underlining the importance of a nuanced understanding of data annotation practices.

Tools of the Trade

With the diverse types of data annotation detailed in the previous chapter, it becomes evident that the right tools are crucial for efficient and accurate annotation. The landscape of data annotation tools is vast, ranging from basic free tools suited to small-scale projects to advanced platforms designed for enterprise-level annotation. This chapter surveys that landscape and offers guidance on selecting the best fit for your needs, including some of the best free image annotation tools and the features that distinguish the most effective platforms.

Choosing the Right Data Annotation Tool

Selecting an appropriate data annotation tool is a pivotal decision that can significantly impact the efficiency and outcome of your AI project. Factors to consider include:

- Data Type Compatibility: Ensure the tool supports the specific types of data (images, text, audio, etc.) your project involves.

- Annotation Features: Look for tools that offer the annotation functionalities needed for your project, such as bounding boxes, semantic segmentation, or entity recognition.

- User Interface and Experience: A user-friendly interface can greatly enhance annotator productivity and reduce the learning curve.

- Collaboration Capabilities: For projects involving teams, collaboration features such as task assignment, progress tracking, and review workflows are essential.

- Scalability and Performance: Consider the tool’s ability to handle large datasets efficiently, especially for enterprise-level projects.

- Integration and Export Options: The tool should seamlessly integrate with your existing workflows and support easy export of annotated data in formats compatible with your AI models.
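Export formats are where tools and training code most often disagree. As an illustration, LabelImg can save boxes in Pascal VOC style (absolute pixel corners) or YOLO style (a class index plus center, width, and height normalized by the image size). A minimal conversion between the two might look like this; the function names are our own.

```python
def voc_to_yolo(box, img_w, img_h, class_id):
    """(x_min, y_min, x_max, y_max) in pixels -> YOLO text line with normalized values."""
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def yolo_to_voc(line, img_w, img_h):
    """YOLO text line -> (class_id, (x_min, y_min, x_max, y_max)) in pixels."""
    class_id, x_c, y_c, w, h = line.split()
    x_c, w = float(x_c) * img_w, float(w) * img_w
    y_c, h = float(y_c) * img_h, float(h) * img_h
    return int(class_id), (x_c - w / 2, y_c - h / 2, x_c + w / 2, y_c + h / 2)


line = voc_to_yolo((100, 200, 300, 400), img_w=640, img_h=480, class_id=0)
print(line)  # 0 0.312500 0.625000 0.312500 0.416667
print(yolo_to_voc(line, 640, 480))
```

Rounding to six decimals means the round trip is accurate to a fraction of a pixel rather than exact.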

Best Free Image Annotation Tools

For many projects, especially those in early stages or with limited budgets, free image annotation tools can be a valuable resource. Some of the top-rated free tools include:

- LabelImg: Widely used for bounding box annotations, LabelImg is open-source and offers a straightforward interface for image annotation.

- CVAT (Computer Vision Annotation Tool): This web-based tool supports a variety of annotation types, including bounding boxes, polygons, and semantic segmentation, making it suitable for more complex projects.

- MakeSense.ai: A versatile, user-friendly online tool that supports multiple annotation types and is ideal for smaller teams or individual researchers.

These tools, while free, offer robust functionalities that can meet the needs of many data annotation projects, providing a solid starting point for teams and individuals looking to annotate images without investing in premium software.

Premium Annotation Platforms

For larger or more complex projects, premium data annotation platforms offer advanced features and dedicated support to streamline the annotation process. These platforms often include AI-assisted annotation features, extensive collaboration tools, and enhanced data management capabilities. Some of the leading premium platforms are:

- SuperAnnotate: Offering a comprehensive suite of annotation tools and AI-assisted features, SuperAnnotate is designed to improve annotation speed and accuracy for teams of all sizes.

- Labelbox: Known for its intuitive interface and powerful project management features, Labelbox facilitates efficient team collaboration and data management.

- V7 Darwin: Specializing in image and video annotation, V7 Darwin provides advanced AI tools that significantly reduce manual annotation efforts, making it ideal for projects requiring high volumes of visual data processing.

Future Trends in Annotation Tools

The future of data annotation tools is closely tied to advancements in AI and machine learning, with several key trends emerging:

- AI-Assisted Annotation: Increasingly, tools are incorporating AI algorithms to automate parts of the annotation process, reducing the time and effort required for manual annotation.

- Integrated Data Management: Tools are evolving to offer more sophisticated data management capabilities, enabling better organization, filtering, and utilization of annotated datasets.

- Collaboration and Remote Work Features: As remote work becomes more common, annotation tools are expanding their collaboration features to support distributed teams more effectively.

By carefully selecting the right data annotation tool, AI and ML projects can achieve higher efficiency, accuracy, and overall success. Whether opting for a free tool for basic projects or investing in a premium platform for more complex needs, the choice of tool should align with the project’s specific requirements and goals. In the next chapter, we’ll explore the challenges associated with data annotation and provide strategies for navigating these hurdles effectively.

Navigating Data Annotation Challenges

Data annotation, while fundamental to the development of accurate and reliable AI models, is fraught with challenges. These range from ensuring high-quality annotations to managing large datasets and addressing ethical concerns. This chapter outlines common hurdles in the data annotation process and provides strategic insights into overcoming them, ensuring your data annotation efforts are both efficient and effective.

Ensuring High-Quality Annotations

The accuracy of AI models is directly tied to the quality of the data they’re trained on, making high-quality annotations paramount. However, achieving consistently high-quality annotations can be challenging due to subjective interpretations, annotator fatigue, and the complexity of the data.

Strategies for Quality Assurance:

- Clear Guidelines: Develop comprehensive annotation guidelines to ensure consistency and reduce subjective interpretations.

- Annotator Training: Provide thorough training for annotators, including examples of high-quality annotations and common pitfalls.

- Quality Control Processes: Implement multi-tier quality control processes, such as peer reviews and expert validations, to catch and correct errors.
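One common way to combine peer and expert review is to seed the task queue with "gold" items that an expert has already labeled and to score each annotator against them. The sketch below assumes labels are stored as plain dictionaries; the data layout and the 0.9 accuracy threshold are hypothetical choices, not a standard.

```python
def audit_against_gold(annotations, gold, min_accuracy=0.9):
    """Score each annotator on the expert-labeled gold items they happened to label.

    annotations: {annotator: {item_id: label}}
    gold:        {item_id: label} validated by a domain expert
    Returns {annotator: (accuracy, needs_follow_up)}.
    """
    report = {}
    for annotator, labels in annotations.items():
        checked = [item for item in labels if item in gold]
        if not checked:
            continue  # no gold items seen yet, nothing to score
        correct = sum(labels[item] == gold[item] for item in checked)
        accuracy = correct / len(checked)
        report[annotator] = (accuracy, accuracy < min_accuracy)
    return report


gold = {"img1": "car", "img2": "bicycle", "img3": "car", "img4": "car"}
annotations = {
    "ann_a": {"img1": "car", "img2": "bicycle", "img3": "car", "img4": "car", "img5": "car"},
    "ann_b": {"img1": "car", "img2": "car", "img3": "bicycle", "img4": "car"},
}
print(audit_against_gold(annotations, gold))
# {'ann_a': (1.0, False), 'ann_b': (0.5, True)}
```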

Managing Large Datasets

AI and ML projects often require vast amounts of annotated data, making dataset management a significant challenge. Efficiently handling, storing, and accessing large datasets is critical for maintaining project timelines and ensuring data integrity.

Effective Dataset Management Tips:

- Data Management Tools: Utilize data management software to organize and categorize data, making it easier to access and annotate.

- Incremental Annotation: Break the dataset into manageable chunks and annotate incrementally, which helps maintain consistency and quality.

- Automated Pre-annotation: Employ tools that offer AI-assisted pre-annotation to expedite the process, requiring annotators only to review and adjust the annotations.
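Incremental batching and AI-assisted pre-annotation combine naturally: the dataset is split into fixed-size batches, and within each batch a model's suggestions are routed by confidence. In the sketch below the `Prediction` records stand in for output from a hypothetical pre-annotation model, and the 0.85 threshold is an assumption to tune per project. Even high-confidence suggestions go to a human for review rather than being accepted automatically.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]


def batches(items, size):
    """Yield consecutive chunks of at most `size` items for incremental annotation."""
    for i in range(0, len(items), size):
        yield items[i:i + size]


def route(predictions, review_threshold=0.85):
    """Split predictions into pre-filled drafts to review and items to label from scratch."""
    review_queue, manual_queue = [], []
    for p in predictions:
        if p.confidence >= review_threshold:
            review_queue.append(p)  # annotator confirms or corrects the suggestion
        else:
            manual_queue.append(p.item_id)  # too uncertain; discard the suggestion
    return review_queue, manual_queue


predictions = [
    Prediction("img1", "car", 0.97),
    Prediction("img2", "bicycle", 0.62),
    Prediction("img3", "car", 0.88),
    Prediction("img4", "pedestrian", 0.91),
]
for batch in batches(predictions, size=2):
    review, manual = route(batch)
    print([p.item_id for p in review], manual)
```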

Annotator Bias and Variability

Annotator bias and variability can introduce inconsistencies in the data, affecting the model’s ability to learn accurately. This is particularly prevalent in subjective tasks like sentiment analysis or when annotating complex scenarios.

Minimizing Annotator Bias:

- Diverse Annotation Teams: Include annotators from varied backgrounds to offset individual biases.

- Blind Annotation: Implement blind annotation processes where annotators are unaware of each other’s work, reducing the influence of previous annotations.

- Regular Calibration Sessions: Conduct sessions where annotators discuss challenging cases and align on best practices, reducing variability.
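Variability between annotators can be measured before it is discussed in a calibration session. A standard statistic for two annotators labeling the same items is Cohen's kappa, which compares the observed agreement p_o with the agreement p_e expected by chance: `kappa = (p_o - p_e) / (1 - p_e)`. A from-scratch version is below for transparency; in practice, scikit-learn's `cohen_kappa_score` computes the same statistic. The sample labels are invented.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed over labels
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label for everything; kappa is undefined,
        # so this sketch reports perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["pos", "pos", "neg", "neg", "neu", "pos"]
annotator_2 = ["pos", "neg", "neg", "neg", "neu", "pos"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.739
```

Values near 1 indicate strong agreement and values near 0 indicate chance-level agreement; labels where agreement is low are good agenda items for the next calibration session.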

Addressing Ethical and Privacy Concerns

Data annotation often involves sensitive or personal information, raising ethical and privacy concerns. Ensuring data protection and ethical annotation practices is not only a legal requirement in many jurisdictions but also a moral imperative.

Ethical Annotation Practices:

- Anonymization: Anonymize data to protect personal information, ensuring that identifiers are removed or obscured.

- Consent and Transparency: Where possible, obtain consent for the use of data, and be transparent about how annotated data will be used.

- Compliance with Regulations: Adhere to data protection regulations such as GDPR or CCPA, implementing practices and protocols that comply with legal standards.
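As a first line of defense, obvious identifiers can be masked before data reaches annotators. The sketch below uses two deliberately simple regular expressions for email addresses and phone numbers; they are assumptions for illustration, will miss names, addresses, and unusual formats, and may over-redact long numeric strings such as dates. A production pipeline would combine such rules with named-entity recognition and human spot checks.

```python
import re

# Simplified patterns (assumptions): real-world formats vary far more than this
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace matched emails and phone numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


print(redact("Contact jane.doe@example.com or +1 (555) 010-2345 about ticket 42."))
# Contact [EMAIL] or [PHONE] about ticket 42.
```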

Balancing Cost and Quality

Cost is a perennial concern in data annotation, with a need to balance the budget against the requirement for high-quality data. Achieving this balance requires strategic planning and the optimization of resources.

Cost-Effective Annotation Strategies:

- Leverage AI-Assisted Annotation: Reduce manual annotation efforts by using AI tools for pre-annotation, focusing human efforts on verification.

- Outsource Wisely: Consider outsourcing to specialized annotation firms that can offer economies of scale, but ensure they adhere to your quality and ethical standards.

- Optimize Annotator Efficiency: Implement tools and workflows that enhance annotator productivity, reducing the time and cost of annotation without compromising quality.
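The trade-off between fully manual work and AI-assisted pre-annotation can be estimated with simple arithmetic before committing to a workflow. All inputs below (item count, seconds per item, the share of items that still need full manual labeling, and the hourly rate) are hypothetical placeholders to replace with measurements from a pilot batch; the sketch also ignores the cost of running the model and of quality control.

```python
def labor_cost(n_items, seconds_per_item, hourly_rate):
    """Cost of annotating n_items at a fixed time per item."""
    return n_items * seconds_per_item / 3600 * hourly_rate


def assisted_cost(n_items, manual_seconds, review_seconds, manual_share, hourly_rate):
    """Pre-annotated items are only reviewed; the rest are labeled from scratch."""
    manual = labor_cost(n_items * manual_share, manual_seconds, hourly_rate)
    review = labor_cost(n_items * (1 - manual_share), review_seconds, hourly_rate)
    return manual + review


# Hypothetical pilot numbers
baseline = labor_cost(10_000, seconds_per_item=60, hourly_rate=20)
assisted = assisted_cost(10_000, manual_seconds=60, review_seconds=15,
                         manual_share=0.3, hourly_rate=20)
print(f"manual only: ${baseline:,.2f}  assisted: ${assisted:,.2f}")
# manual only: $3,333.33  assisted: $1,583.33
```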

Data annotation is a complex, multifaceted process integral to the success of AI projects. By acknowledging and strategically addressing the challenges outlined in this chapter, organizations can enhance the efficiency and effectiveness of their data annotation efforts. The key lies in a balanced approach that prioritizes quality, ethical practices, and cost-efficiency, ensuring the development of robust, reliable AI models.

Data Annotation Best Practices

Optimizing the data annotation process is crucial for developing accurate and reliable AI models. Adhering to best practices not only ensures high-quality data annotation but also streamlines workflows, enhances efficiency, and mitigates potential risks. This chapter delves into the essential practices that should guide your data annotation efforts, from the inception of a project through to its completion.

Establishing Clear Annotation Guidelines

One of the foundational steps in any data annotation project is the creation of clear, comprehensive annotation guidelines. These guidelines serve as the blueprint for annotators, ensuring consistency and accuracy across the dataset.

Key Elements of Effective Guidelines:

- Detailed Descriptions: Provide explicit instructions for every annotation task, including examples and exceptions.

- Visual Examples: Incorporate annotated examples to illustrate guidelines, helping annotators understand the expected outcomes.

- Iterative Updates: Regularly review and update the guidelines based on annotator feedback and project evolution to address any ambiguities or challenges encountered.

Training and Supporting Annotators

The success of data annotation heavily relies on the skill and understanding of the annotators. Investing in their training and ongoing support is essential for maintaining high-quality annotations.

Strategies for Annotator Engagement:

- Comprehensive Training: Beyond initial training, offer ongoing education sessions to cover advanced scenarios and updates to guidelines.

- Feedback Loops: Establish mechanisms for annotators to provide feedback on guidelines and tools, and address their queries promptly to foster a supportive environment.

- Recognition and Motivation: Recognize and reward high-quality work to motivate annotators, encouraging a culture of excellence and dedication.

Implementing Quality Control Measures

Quality control is vital for ensuring the integrity and reliability of annotated data. Implementing multi-tiered quality control measures can significantly reduce errors and improve dataset accuracy.

Effective Quality Control Techniques:

- Peer Review: Incorporate a peer review stage where annotations by one annotator are reviewed by another for accuracy and adherence to guidelines.

- Expert Review: Have a domain expert periodically review a random sample of annotations to catch complex errors that might be overlooked by general annotators.

- Automated Validation Checks: Use software tools to automatically flag potential inconsistencies or deviations from annotation guidelines for review.
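Automated checks are usually simple rules derived from the guidelines. The sketch below assumes box annotations stored as dictionaries with a `label` and a `bbox` in pixel corners, plus a hypothetical label set; it flags unknown labels, boxes that fall outside the image, and boxes too small to be plausible, so that a reviewer can look at them.

```python
ALLOWED_LABELS = {"car", "bicycle", "pedestrian"}  # hypothetical label set from the guidelines


def check_box(ann, img_w, img_h, min_side=2):
    """Return a list of rule violations for one box annotation (empty if it passes)."""
    issues = []
    if ann["label"] not in ALLOWED_LABELS:
        issues.append(f"unknown label {ann['label']!r}")
    x_min, y_min, x_max, y_max = ann["bbox"]
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        issues.append("box outside image or with non-positive size")
    elif min(x_max - x_min, y_max - y_min) < min_side:
        issues.append("box suspiciously small")
    return issues


annotations = [
    {"id": 1, "label": "car", "bbox": (10, 10, 120, 80)},
    {"id": 2, "label": "truck", "bbox": (50, 40, 60, 41)},
    {"id": 3, "label": "bicycle", "bbox": (600, 20, 700, 90)},
]
for ann in annotations:
    issues = check_box(ann, img_w=640, img_h=480)
    if issues:
        print(ann["id"], issues)
```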

Leveraging Technology and Tools

Advancements in AI and machine learning offer valuable tools that can enhance the efficiency and effectiveness of the data annotation process.

Technological Enhancements:

- AI-Assisted Annotation: Utilize AI tools for pre-annotation, significantly reducing manual effort by providing annotators with a suggested starting point.

- Collaboration Platforms: Adopt platforms that facilitate easy collaboration and communication among annotators, project managers, and domain experts.

- Data Management Systems: Implement robust data management systems to organize, store, and easily retrieve annotated datasets, supporting scalability and accessibility.

Prioritizing Data Security and Privacy

In projects involving sensitive or personal information, safeguarding data security and privacy becomes paramount. Ensuring compliance with legal standards and ethical considerations is crucial for protecting individuals’ rights and maintaining public trust.

Data Protection Strategies:

- Anonymization Techniques: Apply data anonymization techniques rigorously to remove or obscure personal identifiers.

- Secure Annotation Platforms: Choose annotation tools and platforms that comply with data protection regulations and employ strong security measures.

- Regular Compliance Audits: Conduct audits to ensure ongoing compliance with data protection laws and ethical guidelines, adapting practices as necessary.

AI-Driven Annotation Techniques

The integration of AI into the data annotation process is not just an innovation; it’s becoming a necessity. AI-driven annotation techniques can significantly reduce the time and effort required for manual annotation, increasing efficiency and accuracy.

Emerging AI-Driven Techniques:

- Automated Object Detection: AI models that automatically detect and label objects in images and videos are becoming more sophisticated and, on well-defined tasks, can approach human-level accuracy, although their output still benefits from human review.

- Natural Language Processing for Text Annotation: NLP technologies are evolving to understand context and sentiment more deeply, allowing more of the annotation of textual data to be automated with increasing precision.

- Audio Recognition Advances: Advances in audio recognition enable more accurate transcription and categorization of sounds, from human speech to environmental noises.

The Integration of Synthetic Data

Synthetic data generation is a fast-growing field that promises large, highly varied datasets without many of the traditional constraints of data collection and annotation, since labels can be produced together with the data itself.

Benefits and Challenges of Synthetic Data:

- Diverse Training Data: Synthetic data can be generated to cover rare or underrepresented scenarios, enhancing the robustness of AI models.

- Privacy Compliance: Generating data that mimics real-world patterns without containing personal information can ease privacy concerns.

- Accuracy and Realism Concerns: Ensuring that synthetic data accurately reflects real-world complexities remains a challenge, requiring ongoing refinement of generation techniques.
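A toy example shows why synthetic data sidesteps manual labeling: when the generator places an object, it already knows the object's exact location. The sketch below draws a single rectangle on a blank grayscale grid (plain Python lists, no imaging library) and returns the matching bounding box; it is intentionally unrealistic, which illustrates the realism gap described in the last point.

```python
import random


def synthetic_sample(width=32, height=32, rng=None):
    """Draw one filled rectangle on a blank grid; its box label is known exactly."""
    rng = rng or random.Random()
    w = rng.randint(4, width // 2)   # rectangle width in pixels
    h = rng.randint(4, height // 2)  # rectangle height in pixels
    x0 = rng.randint(0, width - w)   # keep the rectangle fully inside the grid
    y0 = rng.randint(0, height - h)
    image = [[0] * width for _ in range(height)]
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            image[y][x] = 255
    label = {"label": "rectangle", "bbox": (x0, y0, x0 + w, y0 + h)}
    return image, label


rng = random.Random(0)  # fixed seed for a reproducible dataset
dataset = [synthetic_sample(rng=rng) for _ in range(100)]
print(dataset[0][1])
```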

Collaborative and Crowdsourced Annotation Models

Crowdsourcing and collaborative annotation platforms are democratizing data annotation, allowing more people to contribute to AI development. This approach leverages the power of the community to annotate large datasets quickly and cost-effectively.

Enhancing Collaboration and Crowdsourcing:

- Quality Control Mechanisms: Developing effective quality control and validation systems is essential to ensure the reliability of crowdsourced annotations.

- Incentive Structures: Creating fair and motivating incentive structures is crucial to attract and retain a diverse group of annotators.

- Community Engagement: Fostering a sense of community and purpose among contributors can enhance the quality and efficiency of collaborative annotation efforts.
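A basic quality-control mechanism for crowdsourced labels is to collect several votes per item and keep the majority label only when enough workers agree. The agreement threshold below is a hypothetical setting; with any threshold above 0.5, ties are sent to expert review rather than broken arbitrarily. More elaborate schemes weight each worker by their estimated reliability.

```python
from collections import Counter


def aggregate(votes, min_agreement=0.6):
    """votes: {item_id: [label, ...]} from several crowd workers.

    Returns (consensus labels, item_ids that need expert review).
    """
    consensus, needs_review = {}, []
    for item, labels in votes.items():
        if not labels:
            needs_review.append(item)  # nobody labeled it yet
            continue
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) >= min_agreement:
            consensus[item] = label
        else:
            needs_review.append(item)
    return consensus, needs_review


votes = {
    "t1": ["spam", "spam", "not_spam"],
    "t2": ["spam", "not_spam"],
    "t3": ["not_spam", "not_spam", "not_spam"],
}
print(aggregate(votes))  # ({'t1': 'spam', 't3': 'not_spam'}, ['t2'])
```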

Ethical Considerations and Bias Mitigation

As data annotation directly influences AI behavior, ethical considerations and bias mitigation remain critical concerns. Ensuring fairness and inclusivity in AI models requires conscious efforts in the data annotation process.

Strategies for Ethical Annotation:

- Diverse Data and Annotator Teams: Emphasizing diversity in both datasets and annotator teams can help mitigate biases in AI models.

- Transparency and Accountability: Maintaining transparency about data sources, annotation methodologies, and bias checks is key to ethical AI development.

- Ongoing Bias Audits: Regularly auditing AI models for biases and revising datasets and annotation guidelines accordingly is essential for responsible AI.
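A simple starting point for a bias audit is to compare how often a label is assigned across subgroups of the data. The sketch below uses a hypothetical `group` field (for instance, the dialect or region a text comes from) and an arbitrary 0.1 gap threshold. A large gap does not prove the annotations are biased, since groups can genuinely differ, but it marks where guidelines and annotator decisions deserve a closer look.

```python
from collections import Counter, defaultdict


def label_rate_by_group(records, target_label, group_key="group", label_key="label"):
    """Share of records in each group that received target_label."""
    counts = defaultdict(Counter)
    for r in records:
        counts[r[group_key]][r[label_key]] += 1
    return {g: c[target_label] / sum(c.values()) for g, c in counts.items()}


def has_large_gap(rates, max_gap=0.1):
    """True if the highest and lowest group rates differ by more than max_gap."""
    return max(rates.values()) - min(rates.values()) > max_gap


records = (
    [{"group": "A", "label": "toxic"}] * 2 + [{"group": "A", "label": "ok"}] * 8
    + [{"group": "B", "label": "toxic"}] * 5 + [{"group": "B", "label": "ok"}] * 5
)
rates = label_rate_by_group(records, "toxic")
print(rates, has_large_gap(rates))  # {'A': 0.2, 'B': 0.5} True
```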

Conclusion

From the various types of annotation to the tools that support this work, and through its challenges and best practices, this guide has shown how integral, and how complex, the task of annotating data is. Looking ahead, the field is being shaped by technological innovation, ethical considerations, and a collective move toward more collaborative and inclusive practices.

As AI becomes part of more aspects of our lives, data annotation remains the unsung hero of its progress: the process that turns raw data into the fuel that powers intelligent systems. By embracing the evolving landscape of data annotation, we not only enhance the capabilities of AI but also help ensure its development is guided by principles of accuracy, fairness, and inclusivity.

As new discoveries and advances in AI emerge, data annotation, as both a challenge and an opportunity, will remain a testament to the collaboration between humans and machines, driving us toward a future where technology enhances every aspect of human life.
